Robots.txt Generator

The Ultimate Guide for Website Owners & SEO Experts: Mastering Robots.txt

Although it is often overlooked, the robots.txt file in your website's root directory plays an essential role in how search engine crawlers handle your content. It works like a road map: it points bots toward the pages you want crawled and politely asks them to stay away from areas you would rather they not visit.

Understanding Robots.txt: An Introduction

Think of robots.txt as a set of instructions for your website's automated visitors. It is a plain text file, served from the root of your domain (for example, https://www.example.com/robots.txt), that tells search engine crawlers which pages to visit and which to skip. Reputable crawlers follow these rules voluntarily, which helps search engines concentrate on your most relevant content and makes better use of your website's crawl budget.
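As a minimal sketch of how a compliant crawler reads these rules, the snippet below feeds a small example robots.txt to Python's standard-library `urllib.robotparser.RobotFileParser` and checks two URLs. The file contents, the `example.com` URLs, and the `ExampleBot` user agent are illustrative assumptions, not taken from a real site.

```python
from urllib.robotparser import RobotFileParser

# A minimal, illustrative robots.txt (not from any real site).
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
"""

parser = RobotFileParser()
# parse() accepts an iterable of lines, so no network request is made.
parser.parse(ROBOTS_TXT.splitlines())

# A compliant crawler checks each URL before requesting it.
# "ExampleBot" is a hypothetical user agent name.
for url in ("https://www.example.com/products/shoes",
            "https://www.example.com/checkout/cart"):
    verdict = "crawl" if parser.can_fetch("ExampleBot", url) else "skip"
    print(verdict, url)
```

A real crawler would typically call `set_url()` and `read()` to download the live file instead of parsing a string.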

The Importance of Robots.txt for SEO

Although robots.txt has no direct effect on your search engine rankings, it can have a big impact on your website's overall SEO health. By deciding carefully which pages crawlers may visit, you can:

Prioritize Important Pages: Steer crawlers away from low-value URLs so that more of their attention goes to your most important content.

Save Crawl Budget: Keep crawlers from spending time on irrelevant or duplicate pages.

Keep Non-Public Areas Out of Crawls: Ask crawlers to skip sections such as admin panels. Because robots.txt is publicly readable and compliance is voluntary, it is not a security measure; protect confidential data such as user records with authentication.

Reduce Server Load: Cutting unnecessary crawl requests helps keep your server responsive, so pages load quickly for human visitors.

Creating or Modifying Your Robots.txt File

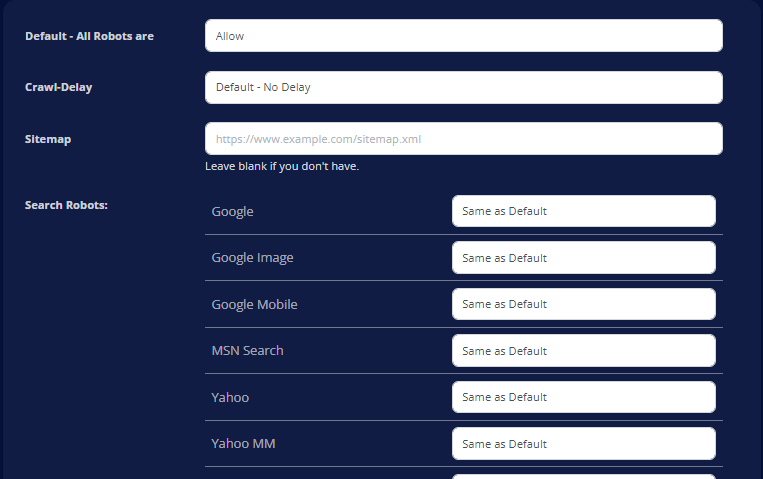

Don't worry if you have no coding experience. Many easy-to-use robots.txt generators are available online, and they let you build or edit your robots.txt file without writing a single line of code.
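Under the hood, a generator simply turns your choices into plain text. The sketch below assumes a hypothetical input format (a list of groups, each a dict with `user_agents`, `allow`, `disallow`, and an optional `crawl_delay`) and a hypothetical `generate_robots_txt` function; real generator tools will differ in their options and output.

```python
def generate_robots_txt(groups, sitemaps=()):
    """Build robots.txt text from a list of rule groups.

    Hypothetical helper: each group is a dict with "user_agents" (list),
    optional "allow" and "disallow" path lists, and optional "crawl_delay".
    """
    blocks = []
    for group in groups:
        lines = [f"User-agent: {agent}" for agent in group["user_agents"]]
        # Allow exceptions are written before Disallow rules (see note below).
        lines += [f"Allow: {path}" for path in group.get("allow", [])]
        lines += [f"Disallow: {path}" for path in group.get("disallow", [])]
        if "crawl_delay" in group:
            lines.append(f"Crawl-delay: {group['crawl_delay']}")
        blocks.append("\n".join(lines))
    if sitemaps:
        blocks.append("\n".join(f"Sitemap: {url}" for url in sitemaps))
    # Blank lines separate groups; the file ends with a newline.
    return "\n\n".join(blocks) + "\n"


robots = generate_robots_txt(
    [{"user_agents": ["*"], "allow": ["/admin/help/"], "disallow": ["/admin/"]}],
    sitemaps=["https://www.example.com/sitemap.xml"],
)
print(robots)
```

The function writes Allow lines before Disallow lines. Google applies the most specific matching rule regardless of order, but simpler parsers, including Python's `urllib.robotparser`, stop at the first matching rule, so listing exceptions first is the safer choice.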

Key Robots.txt Directives and What They Do

User-agent: Specifies which crawler the rules that follow apply to, such as Googlebot or Bingbot; an asterisk (*) matches all crawlers.

Allow: Permits crawling of particular folders or URLs, typically as an exception inside a disallowed folder.

Disallow: Blocks crawling of particular folders or URLs.

Crawl-delay: Asks a crawler to wait a number of seconds between requests so it does not overload the server. Support varies: Bing honors it, but Google ignores it.

Sitemap: Gives the full URL of your XML sitemap so search engines can find and index your pages more quickly.
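The sketch below puts all five directives into one illustrative file and queries it with Python's standard-library `urllib.robotparser` (`site_maps()` requires Python 3.8 or later). The paths, the sitemap URL, and the choice of Bingbot for the `Crawl-delay` group are assumptions for demonstration. This parser matches path prefixes in rule order and does not implement Google's `*` and `$` wildcards, so it only approximates how a given search engine behaves.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: one group for Bingbot, one catch-all group,
# and a Sitemap line that applies regardless of user agent.
ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 5
Disallow: /search/

User-agent: *
Allow: /admin/help/
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

base = "https://www.example.com"
# Bingbot matches its own group, so the * group does not apply to it.
print(parser.can_fetch("Bingbot", base + "/search/results"))    # False
print(parser.can_fetch("Bingbot", base + "/admin/"))            # True
# Other crawlers fall back to the * group; the Allow exception matches first.
print(parser.can_fetch("Googlebot", base + "/admin/help/faq"))  # True
print(parser.can_fetch("Googlebot", base + "/admin/users"))     # False
print(parser.crawl_delay("Bingbot"))    # 5
print(parser.crawl_delay("Googlebot"))  # None
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```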

How Does a Sitemap Differ from Robots.txt?

Sitemaps and robots.txt both matter for search engine optimization, but they serve different functions:

Robots.txt: Tells crawlers which parts of your website they may and may not visit.

Sitemap: Gives search engines a list of the pages you want them to discover and index.
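To make the contrast concrete, the sketch below builds a minimal XML sitemap with Python's `xml.etree.ElementTree`, using the sitemaps.org namespace, next to a small robots.txt that only restricts crawling and points to that sitemap. The page URLs and the `build_sitemap` helper are hypothetical; real sitemaps often add optional tags such as `<lastmod>`.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(page_urls):
    """Return a minimal XML sitemap (as a string) listing page_urls.

    Hypothetical helper for illustration; only the required <loc> tag is emitted.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in page_urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Sitemap: the pages you WANT crawled and indexed (example URLs).
sitemap_xml = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/products/",
])

# robots.txt: where crawlers may NOT go, plus a pointer to the sitemap.
robots_txt = (
    "User-agent: *\n"
    "Disallow: /admin/\n"
    "\n"
    "Sitemap: https://www.example.com/sitemap.xml\n"
)

print(sitemap_xml)
print(robots_txt)
```

ElementTree escapes special characters such as `&` in URLs, which the sitemap format requires.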

Robots.txt Optimization for SEO Success

To optimize your robots.txt file, follow these recommended practices:

Reference Your Sitemap: Add a Sitemap line to your robots.txt file with the full URL of your XML sitemap.

Prioritize Crawling: Use the Allow directive to keep your most important pages crawlable, even when they sit inside a folder you otherwise disallow.

Stop Needless Crawling: Use the Disallow directive to keep crawlers away from pages still under development, areas not meant for search, and duplicate content.

Monitor Crawl Activity: Review your robots.txt file and server logs regularly to see how crawlers interact with your site. Google Search Console also reports crawl statistics and robots.txt problems.
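One way to apply these practices before deploying a change is to test the file against URLs you care about. The sketch below uses a hypothetical `audit_robots` helper built on Python's `urllib.robotparser`; the rules and URLs are illustrative. Because this parser uses first-match prefix rules without Google's wildcards, treat its output as a quick sanity check and confirm Google-specific behavior in Search Console.

```python
from urllib.robotparser import RobotFileParser


def audit_robots(robots_txt, user_agent, must_allow, must_block):
    """Return (reason, url) pairs where crawlability differs from intent.

    Hypothetical helper for illustration only.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url in must_allow:
        if not parser.can_fetch(user_agent, url):
            problems.append(("blocked but should be crawlable", url))
    for url in must_block:
        if parser.can_fetch(user_agent, url):
            problems.append(("crawlable but should be blocked", url))
    return problems


# The /products/ rule is a deliberate mistake for the audit to catch.
ROBOTS_TXT = """\
User-agent: *
Disallow: /staging/
Disallow: /products/

Sitemap: https://www.example.com/sitemap.xml
"""

issues = audit_robots(
    ROBOTS_TXT,
    "Googlebot",
    must_allow=["https://www.example.com/products/shoes"],
    must_block=["https://www.example.com/staging/new-home"],
)
for reason, url in issues:
    print(f"{reason}: {url}")
```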

Keywords to Remember

- robots.txt

- robots.txt generator

- robots exclusion protocol

- search engine crawler

- website indexing

- crawl budget

- sitemap

- SEO optimization

- allow directive

- disallow directive

Learning how robots.txt works, and using tools such as the Robots.txt Generator, helps search engines crawl and index your website smoothly and supports your broader SEO efforts.
